Teaching AI to Speak in Human Concepts

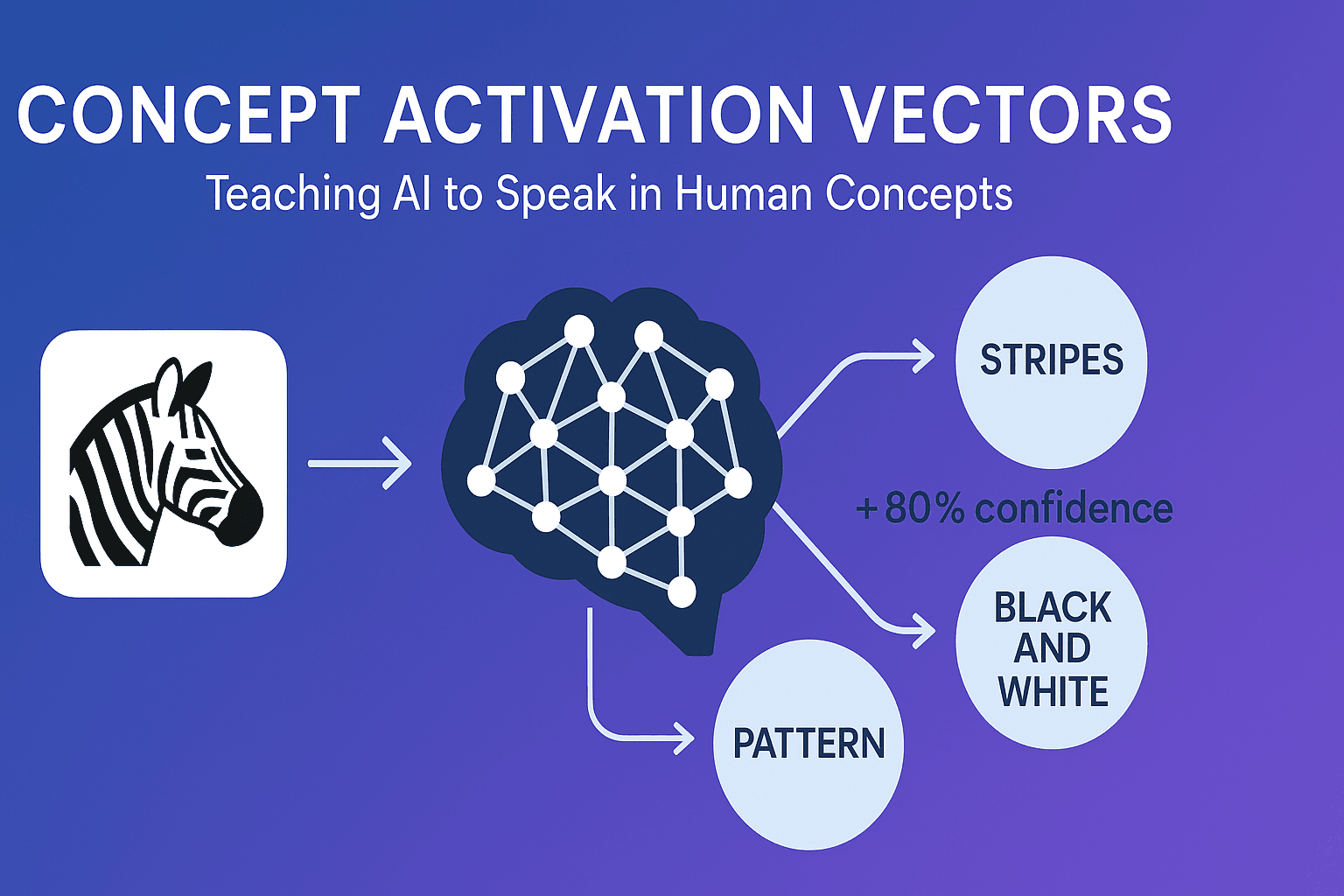

In image processing, AI models often feel like masterminds with no language skills: they know what they see but can’t explain it in terms we understand. This is where Testing with Concept Activation Vectors (TCAV) comes in, acting as an interpreter that connects abstract neural patterns to concepts humans can relate to, like “stripes,” “wheels,” or “cloudy skies.”

What Are Concept Activation Vectors and TCAV?

A Concept Activation Vector (CAV) is a direction in the activation space of one of the network’s layers that represents a human-defined concept. TCAV, developed by researchers at Google, uses CAVs to measure how much a high-level, human-understandable concept influences a model’s prediction for a given class. It calculates directional derivatives along the CAV in the model’s latent space to determine how sensitive the model’s decisions are to a concept you define in advance.
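In the notation of the original TCAV paper, let f_l(x) be the activation vector at layer l for input x, h_{l,k} the part of the network that maps those activations to the logit for class k, and v_C^l the unit-length CAV for concept C at that layer. The conceptual sensitivity of input x is then the directional derivative of the class logit along the CAV:

$$
S_{C,k,l}(x) = \lim_{\epsilon \to 0} \frac{h_{l,k}\big(f_l(x) + \epsilon\, v_C^l\big) - h_{l,k}\big(f_l(x)\big)}{\epsilon} = \nabla h_{l,k}\big(f_l(x)\big) \cdot v_C^l
$$

A positive S_{C,k,l}(x) means that nudging the activations of x toward the concept increases the score for class k.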

For example, a model distinguishing zebras from horses might actually focus on “stripes” rather than shape or context. By defining “stripes” via several sample images, TCAV can tell you if that concept is driving the classification — and by how much.

Why Is This Important?

- Transparency: It transforms pixel blobs into clear, named concepts.

- Bias Detection: Helps spot when a model overrelies on irrelevant or unfair features.

- Domain Control: Enables non‑technical stakeholders (designers, medical experts, etc.) to understand and critique model reasoning.

How Does TCAV Work?

- Concept Definition: Provide labeled examples for a concept (e.g., “stripes”).

- Activation Extraction: Run these examples through the network and capture neuron activations in a chosen layer.

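- Vector Learning: Train a simple linear classifier in this activation space to separate the concept examples from random images. The vector normal to its decision boundary, pointing toward the concept examples, is the Concept Activation Vector.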

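- Directional Sensitivity: For each input of the target class, measure how the model’s prediction changes when the layer’s activations move in the “concept” direction, i.e., the directional derivative of the class score along the CAV.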
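The four steps can be sketched in plain Python. This is a minimal toy under loud assumptions: the “activations” are synthetic 3-dimensional vectors rather than outputs of a real network layer, `class_logit` is a hypothetical stand-in for the part of the network that maps layer activations to a “zebra” logit, and every function name here is illustrative. Logistic regression plays the role of the linear classifier, and the directional derivative is approximated with a central finite difference instead of backpropagation, since the toy head has no automatic differentiation.

```python
import math
import random

DIM = 3  # hypothetical toy layer width; dimension 0 stands in for "stripes"


def class_logit(a):
    """Hypothetical class head h_k: maps toy layer activations to a 'zebra' logit."""
    return 2.0 * a[0] + 0.3 * a[1] - 0.1 * a[2] ** 2


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_cav(concept_acts, random_acts, lr=0.1, epochs=500):
    """Step 3: fit logistic regression (concept = 1, random = 0) by batch gradient descent.

    Returns the unit-normalized weight vector: the normal to the decision
    boundary, pointing toward the concept examples.
    """
    data = [(a, 1.0) for a in concept_acts] + [(a, 0.0) for a in random_acts]
    w = [0.0] * DIM
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * DIM
        grad_b = 0.0
        for a, y in data:
            err = sigmoid(sum(wi * ai for wi, ai in zip(w, a)) + b) - y
            for i in range(DIM):
                grad_w[i] += err * a[i]
            grad_b += err
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    norm = math.sqrt(sum(wi * wi for wi in w))
    return [wi / norm for wi in w]


def directional_derivative(h, a, v, eps=1e-4):
    """Step 4: central finite-difference estimate of the slope of h at a along v."""
    plus = [ai + eps * vi for ai, vi in zip(a, v)]
    minus = [ai - eps * vi for ai, vi in zip(a, v)]
    return (h(plus) - h(minus)) / (2.0 * eps)


def tcav_score(h, class_acts, cav):
    """Fraction of class examples whose logit increases along the CAV."""
    positive = sum(1 for a in class_acts if directional_derivative(h, a, cav) > 0)
    return positive / len(class_acts)


if __name__ == "__main__":
    rng = random.Random(0)

    def sample(mean0, n):
        # Steps 1-2 (simulated): synthetic activations instead of a real network layer.
        return [[rng.gauss(mean0, 0.5), rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(n)]

    stripes = sample(2.0, 50)  # activations of "stripes" concept images
    randoms = sample(0.0, 50)  # activations of random counterexample images
    zebras = sample(1.0, 100)  # activations of images of the target class

    cav = train_cav(stripes, randoms)
    print("CAV:", [round(x, 3) for x in cav])
    print("TCAV score for 'stripes' -> zebra:", tcav_score(class_logit, zebras, cav))
```

With a real network, you would typically replace the synthetic lists with activations captured from a chosen layer and compute the gradient with your framework’s automatic differentiation; the shape of the computation stays the same.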

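Aggregating these sensitivities over many inputs of a class yields the TCAV score: the fraction of those inputs whose prediction increases when activations move toward the concept. This tells us whether the model is concept-driven and how broadly its decisions depend on the concept. Because a linear classifier can separate almost any two image sets, the original method also trains CAVs against several different random sets and keeps a concept only when its scores differ significantly from those of CAVs built from random images alone.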
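Written out, the TCAV score for concept C, class k, and layer l counts the inputs of class k (the set X_k) whose conceptual sensitivity S_{C,k,l}(x), the directional derivative of the class-k logit along the concept’s CAV, is positive:

$$
\mathrm{TCAV}_{Q_{C,k,l}} = \frac{\big\lvert \{\, x \in X_k : S_{C,k,l}(x) > 0 \,\} \big\rvert}{\lvert X_k \rvert}
$$

For example, if 80 of 100 zebra images have a positive sensitivity to “stripes,” the score is 80 / 100 = 0.8.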

Applications in Image Processing

- Medical Imaging: Evaluate whether a tumor detection model relies on clinically relevant features rather than irrelevant markers like patient nameplates.

- Creative Design: Control generated images by quantifying and adjusting features like “retro style” or “symmetry.”

- Bias Auditing: See if “skin tone” influences face recognition more than it should.

Future of Concept‑Based Explainability

While pixel-level explanations (like Grad-CAM) are helpful, they often require expert interpretation. TCAV shifts the conversation: instead of reading heatmaps, we can question the model about concepts in our own words. In the long run, this could empower scientists, doctors, and artists to collaborate with AI systems instead of merely auditing them.

When AI starts speaking in concepts, it’s like getting subtitles for a complex foreign film — you not only watch the story unfold but also understand it. And that’s the kind of transparency we need for trustworthy AI.