Diffusion-Driven Counterfactuals for Video AI

Big idea: make tiny, realistic edits to a video—like softening a micro-expression, nudging a wrist angle, or delaying a contact—and see if the AI changes its mind. If that tiny change flips the result, you’ve found a counterfactual: a clear, visual answer to “what really drove this prediction?”

Why this matters

Clarity you can watch: Instead of a heatmap, you get an A/B clip: original vs. minimally edited. It’s instantly clear which moment or motion mattered.

Debugging superpower: If a model flips just because a background logo was blurred, you’ve discovered a shortcut your model relied on.

Trust & accountability: For stakeholders, a short clip that shows “this tiny change flipped the decision” is easier to understand than charts or equations.

How it works

Simply put, diffusion models are generative models that can create or edit realistic video. We use them to make small, smooth edits to the original clip while keeping everything else the same. The goal is to change as little as possible until the model’s answer flips, which isolates the cue the prediction depended on. Think of it like nudging a single domino in a long chain and seeing whether the cascade still falls. If moving that one domino changes the outcome, that’s your reason.
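To make "change as little as possible until the answer flips" concrete, here is a minimal sketch of that search in Python. It is not a diffusion pipeline: the `edit` callable stands in for whatever editor you use, and the sketch assumes that editor exposes a single strength knob, that strength 0 leaves the clip unchanged, and that the label stays flipped above the first flipping strength. The names `smallest_flipping_edit`, `toy_classify`, and `toy_edit` are hypothetical and exist only for illustration.

```python
from typing import Callable, List, Optional, Tuple

Clip = List[float]  # toy stand-in: one number per frame (e.g. a wrist angle)


def smallest_flipping_edit(
    clip: Clip,
    classify: Callable[[Clip], str],
    edit: Callable[[Clip, float], Clip],
    max_strength: float = 1.0,
    tol: float = 1e-3,
) -> Optional[Tuple[float, Clip]]:
    """Bisect for the weakest edit strength that changes the label.

    Assumes strength 0 is a no-op and that the label stays flipped for
    every strength above the first flipping one (real editors may not
    behave that neatly).
    """
    original_label = classify(clip)
    if classify(edit(clip, max_strength)) == original_label:
        return None  # even the strongest edit does not flip the model
    lo, hi = 0.0, max_strength  # invariant: lo keeps the label, hi flips it
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if classify(edit(clip, mid)) == original_label:
            lo = mid
        else:
            hi = mid
    return hi, edit(clip, hi)


# Hypothetical toy model and editor, only to make the sketch runnable.
def toy_classify(clip: Clip) -> str:
    return "contact" if sum(clip) / len(clip) > 0.5 else "no contact"


def toy_edit(clip: Clip, strength: float) -> Clip:
    # Scale every frame's angle down as the edit gets stronger.
    return [angle * (1.0 - strength) for angle in clip]


clip = [0.8, 0.8, 0.8]
result = smallest_flipping_edit(clip, toy_classify, toy_edit)
if result is not None:
    strength, what_if = result
    print(f"flips at strength ~{strength:.3f}: {toy_classify(what_if)}")
```

Bisection is used here only because the toy editor has one knob. With a real video editor the search space is much larger, but the stopping rule is the same: keep the weakest edit that still flips the answer.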

What you actually see

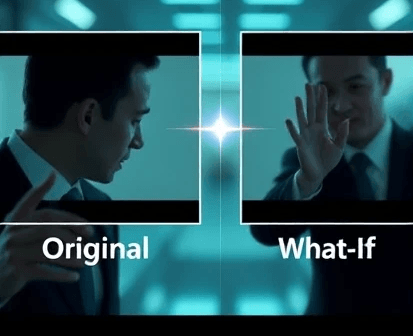

A short side-by-side clip: original vs. “what-if”.

The “what-if” looks natural across frames (no flicker or jump cuts), and only the suspected cue is different—like a hand not quite touching an object, or an expression that’s slightly softer.

How to judge a good counterfactual

Did it flip? If the model’s answer didn’t change, the edit isn’t explaining much.

Was the change small? Good counterfactuals don’t rewrite the scene; they tweak one meaningful cue.

Does it look real over time? The edit should feel like a plausible video, not a glitchy GIF.

Would a human agree? When people watch both clips, does the edit match their intuition about what would change the label?
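The first three checks can be turned into rough numbers. The sketch below is one possible scoring, not an established metric: it assumes videos are plain lists of frames, each frame a list of pixel values, and the names `score_counterfactual`, `edit_size`, and `flicker` are made up for this example. The fourth check, human agreement, still needs people watching both clips.

```python
from typing import Dict, List, Union

Video = List[List[float]]  # toy stand-in: frames x pixel values


def _mean_abs(values: List[float]) -> float:
    return sum(abs(v) for v in values) / len(values) if values else 0.0


def score_counterfactual(
    original: Video, edited: Video, label_before: str, label_after: str
) -> Dict[str, Union[bool, float]]:
    # Residual = what the edit added or removed, per frame and pixel.
    residual = [
        [e - o for o, e in zip(f_orig, f_edit)]
        for f_orig, f_edit in zip(original, edited)
    ]
    # "Was the change small?": average size of the edit.
    edit_size = _mean_abs([r for frame in residual for r in frame])
    # "Does it look real over time?": how much the edit itself jumps
    # between consecutive frames. Natural motion in the original is not
    # penalised, because only the residual is compared.
    frame_to_frame = [
        b - a
        for prev, nxt in zip(residual, residual[1:])
        for a, b in zip(prev, nxt)
    ]
    return {
        "flipped": label_before != label_after,  # "Did it flip?"
        "edit_size": edit_size,
        "flicker": _mean_abs(frame_to_frame),
    }


original = [[0.0, 0.0] for _ in range(3)]
steady = [[0.1, 0.0] for _ in range(3)]          # same small edit in every frame
glitchy = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.0]]   # edit appears in one frame only

steady_score = score_counterfactual(original, steady, "contact", "no contact")
glitchy_score = score_counterfactual(original, glitchy, "contact", "no contact")
```

In this toy case the glitchy edit is the smaller of the two, yet it scores worse on flicker, which is why size and temporal smoothness are worth tracking separately.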

Where this helps right now

Product analytics & safety: Spot fragile behaviors (e.g., the model over-weights a brand mark or a lighting change).

Model iteration: Turn counterfactuals into better training data—teach models not to rely on the wrong cues.

Compliance & audit trails: Clear visual evidence for why a prediction changed.

If you’re thinking about bringing explainability into real-world workflows, browsing the Prexable Blog can spark ideas on how to present insights so teams actually act on them.

Want the research flavor?

Diffusion-driven counterfactuals turn “Why did the model say that?” into a clear, visual story. With tiny, realistic edits that cause a flip, you learn what truly mattered—so you can debug faster, communicate better, and ship models people trust. If you enjoy peeking under the hood, here’s a research-style overview that inspired this post and explores how to balance faithfulness (did we flip the model for the right reason?) and realism (does the video still look natural):

Paper draft: https://arxiv.org/html/2509.08422v1