When Machines Learn to Read Faces: How Expression Recognition Is Helping Autism Patients Connect

For centuries, humans have learned to read emotions by watching each other’s faces: a frown of worry, a smile of joy, an eyebrow raised in surprise. It’s one of the oldest, most instinctive languages on Earth.

Now, machines are learning to read that language too.

Facial expression recognition models are teaching computers to interpret human emotion from a single image or video frame. And beyond all the science, something beautiful is happening: these systems are beginning to help people understand each other better, especially people who, for neurological reasons, have always found that emotional language difficult to read: individuals on the autism spectrum.

The Science of Facial Expression Recognition

At its heart, facial expression recognition is a form of computer vision, a way for machines to see and understand faces the way we do.

Here’s how it works, in human terms:

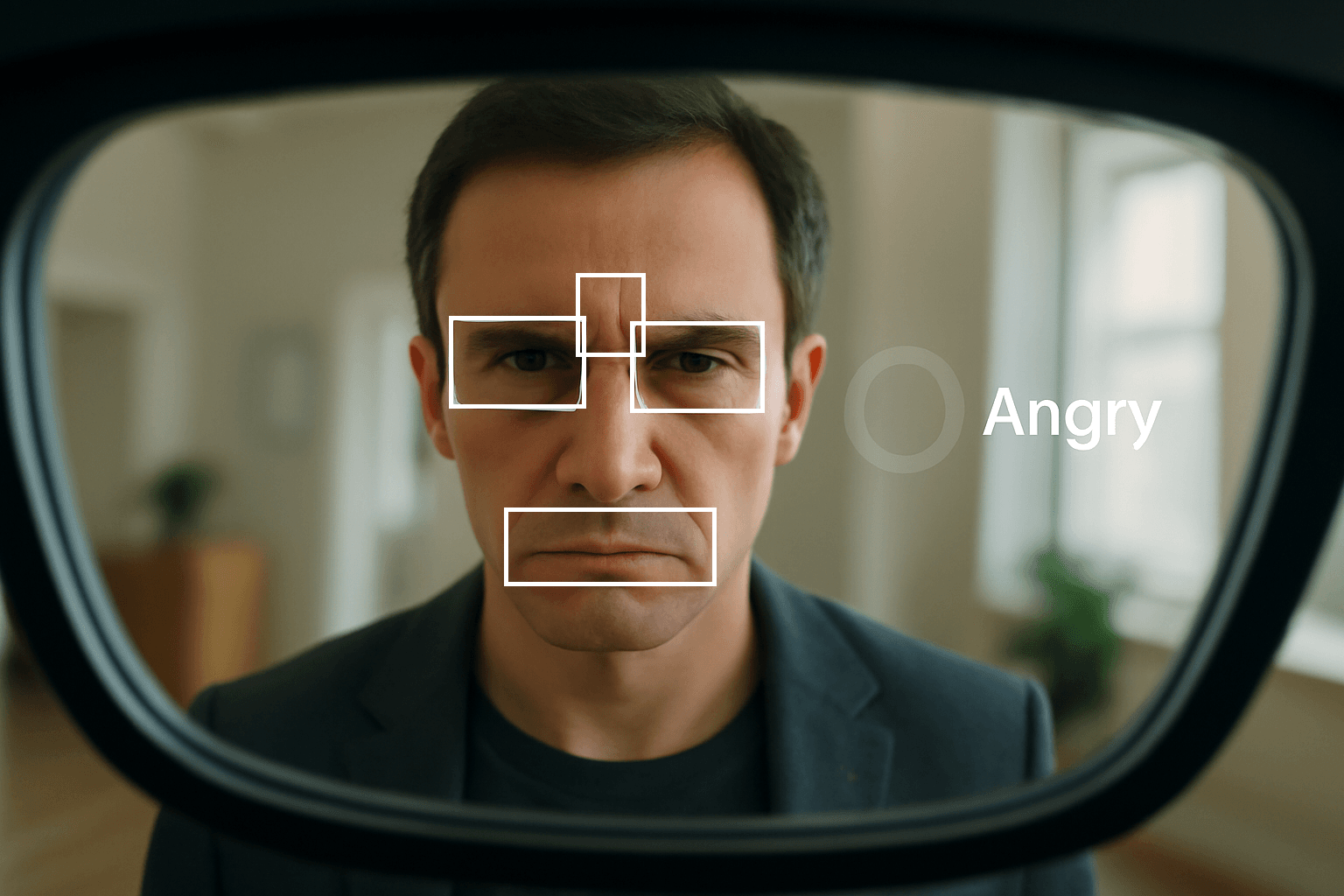

- Detection: The model first identifies that a face is present in the camera’s view.

- Landmarking: It maps key points: eyes, eyebrows, nose, mouth, and jawline.

- Feature extraction: Subtle movements in those points are analyzed: a smile curves the lips, a frown lowers the eyebrows, surprise widens the eyes.

- Emotion classification: The system compares those patterns to thousands of known examples, then predicts an emotion: happy, sad, surprised, angry, neutral, and so on.

Under the hood, it’s powered by deep learning models, typically convolutional neural networks (CNNs) or transformer-based architectures, trained on vast datasets of human expressions.
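To make the last two steps (feature extraction and emotion classification) a little more concrete, here is a toy sketch in Python. It is not how a production system works: the `Landmarks` fields, the feature names, and every threshold are made up for illustration, and a few hand-written rules stand in for the trained neural network a real model would use.

```python
from dataclasses import dataclass


@dataclass
class Landmarks:
    """Hypothetical, simplified landmarks in image coordinates (y grows downward)."""
    mouth_corner_y: float  # average height of the two mouth corners
    mouth_center_y: float  # height of the middle of the lips
    brow_y: float          # average eyebrow height
    eye_y: float           # average eye-centre height
    eye_opening: float     # gap between upper and lower eyelid
    face_height: float     # used to normalise for face size


def extract_features(lm: Landmarks) -> dict:
    """Turn raw landmark positions into scale-independent features."""
    return {
        # Positive when the corners sit above the centre of the lips (a smile).
        "mouth_curve": (lm.mouth_center_y - lm.mouth_corner_y) / lm.face_height,
        # Larger when the eyebrows are raised, smaller when they are lowered.
        "brow_gap": (lm.eye_y - lm.brow_y) / lm.face_height,
        "eye_opening": lm.eye_opening / lm.face_height,
    }


def classify(features: dict) -> str:
    """Toy rule-based stand-in for a trained classifier; thresholds are made up."""
    if features["eye_opening"] > 0.08 and features["brow_gap"] > 0.12:
        return "surprised"
    if features["mouth_curve"] > 0.02:
        return "happy"
    if features["mouth_curve"] < -0.02:
        return "sad"
    if features["brow_gap"] < 0.06:
        return "angry"
    return "neutral"


smiling = Landmarks(mouth_corner_y=140, mouth_center_y=150, brow_y=62,
                    eye_y=80, eye_opening=10, face_height=200)
print(classify(extract_features(smiling)))  # happy
```

Dividing by `face_height` is the one design choice worth noting: it keeps the features comparable whether the face is close to the camera or far away.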

But what’s most impressive is that these models are becoming more reliable across different faces, cultures, and lighting conditions. Some can even detect microexpressions, fleeting emotional clues that last less than half a second and that most people miss.

Where We See It Already

You’ve probably encountered facial expression recognition without realizing it.

It’s used in:

- Photo apps that group images by smiles or moods.

- Video games that respond to a player’s emotions.

- Customer service AI that detects frustration in live video chats.

- Healthcare systems that assess pain or stress from a patient’s face.

But one of the most powerful uses is happening in a place few expected: helping people with autism better navigate the world of human emotion.

When AI Becomes an Emotional Coach

For many individuals on the autism spectrum, facial expressions can feel like a foreign alphabet, full of signals that are hard to decode. A smile might not always mean happiness. A furrowed brow might go unnoticed.

That’s where facial expression recognition models, embedded in wearable technology, are making a quiet revolution.

Smart Glasses That See and Explain

Researchers at MIT’s Media Lab, Stanford University, and several startups around the world are developing AI-powered smart glasses that can recognize facial expressions in real time.

Here’s how they work:

- The glasses have a small camera facing outward.

- The camera captures the face of the person the wearer is talking to.

- An onboard AI model analyzes the person’s expressions, using facial landmarks and deep learning algorithms.

- Through a gentle cue (like a color change in the lens, a vibration, or an earpiece whisper), the glasses tell the wearer what emotion they’re seeing.
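The cueing step is worth a small sketch, because a per-frame prediction that flickers between emotions would be more distracting than helpful. The Python below is one plausible approach, not the design of any particular device: the `CueSmoother` class, the `CUE_COLORS` mapping, and the default thresholds are all hypothetical. It only signals an emotion once enough recent, confident frames agree.

```python
from collections import Counter, deque

# Hypothetical mapping from emotion label to a lens colour cue.
CUE_COLORS = {"happy": "green", "sad": "blue", "angry": "red",
              "surprised": "yellow", "neutral": "white"}


class CueSmoother:
    """Majority vote over a sliding window of confident per-frame predictions."""

    def __init__(self, window=5, min_confidence=0.6, min_votes=3):
        self.recent = deque(maxlen=window)  # oldest entries drop off automatically
        self.min_confidence = min_confidence
        self.min_votes = min_votes

    def update(self, label, confidence):
        """Record one frame's prediction; return a cue colour or None."""
        if confidence >= self.min_confidence:
            self.recent.append(label)  # low-confidence frames are ignored
        if not self.recent:
            return None
        top_label, votes = Counter(self.recent).most_common(1)[0]
        if votes < self.min_votes:
            return None  # not enough agreement yet, stay quiet
        return CUE_COLORS.get(top_label)


smoother = CueSmoother()
frames = [("happy", 0.9), ("happy", 0.8), ("angry", 0.3), ("happy", 0.85)]
cues = [smoother.update(label, conf) for label, conf in frames]
print(cues)  # [None, None, None, 'green']
```

Staying silent until the vote is clear trades a fraction of a second of delay for a calmer experience, which seems like the right trade for a wearable meant to reduce stress.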

It’s like having a friendly coach whispering:

“She’s smiling, she’s happy,”

“He looks puzzled, maybe explain again,”

“They seem uncomfortable, give them space.”

These devices don’t replace empathy; they support it. They turn the invisible world of facial emotion into something tangible and teachable.

Helping Autism Patients Communicate with Confidence

For children and adults with autism, these tools can be life-changing.

Many people on the spectrum find social interactions stressful because they miss subtle emotional signals. Smart glasses using facial expression recognition give them real-time feedback, helping them understand and respond appropriately in conversations.

Therapists report that after consistent use, some users begin to recognize emotions without the device. The AI acts as a bridge toward natural social intuition.

It’s therapy through technology: not replacing human connection, but training it gently, consistently, and with compassion.

The Ethical Balance

Of course, as with any technology involving cameras and emotions, privacy is a crucial issue.

Developers are working hard to ensure these systems process data locally (on the device) and avoid storing or transmitting sensitive facial information. The goal isn’t surveillance; it’s empowerment.
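In code, the "process locally, keep nothing" idea might look roughly like the sketch below. The function name `analyze_and_discard` and the stand-in `fake_classifier` are hypothetical, and wiping a Python buffer is only an illustration of the principle; a real device would also depend on hardware and operating-system protections.

```python
from typing import Callable


def analyze_and_discard(frame: bytearray,
                        classify: Callable[[bytearray], str]) -> str:
    """Classify a frame in memory, then wipe the pixel buffer.

    Only the emotion label leaves this function; nothing is written
    to disk or sent over the network.
    """
    try:
        return classify(frame)
    finally:
        # Overwrite the pixels even if the classifier raised an error.
        for i in range(len(frame)):
            frame[i] = 0


def fake_classifier(frame: bytearray) -> str:
    """Stand-in for an on-device model (assumption for this example)."""
    return "happy"


frame = bytearray([12, 200, 37, 90])  # pretend these are pixel values
label = analyze_and_discard(frame, fake_classifier)
```

After the call, `label` holds the emotion and `frame` contains only zeros.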

The Future: Empathy Powered by AI

Facial expression recognition began as a technological challenge: can a machine read a face? But it’s evolving into something far more human: can a machine help us understand each other better?

From smart glasses for autism support to emotional robots in therapy and education, the future of AI in this space isn’t about replacing empathy; it’s about extending it.

For a child who’s always felt lost in a room full of unspoken emotions, a tiny device that gently says, “He’s happy to see you,” isn’t just a gadget.

It’s a lifeline.

When technology learns to read faces, it’s not about machines getting smarter; it’s about humans connecting more deeply. And for people with autism, facial expression recognition is opening doors to a world that once seemed hard to read: one smile, one glance, one connection at a time.